13 Core Software Testing Best Practices Checklist

Discover best practices for optimizing software testing to ensure the high quality of your software through effective planning, test design, automation, and bug reporting.

The world of programming and technology is never static: every month brings new game-changing architecture solutions, tools, and software platforms. Industry standards evolve just as quickly, which is why adhering to software testing best practices should be mandatory for QA specialists.

In this guide, the DogQ team has compiled key practices that help you achieve thorough and efficient testing and deliver high-quality software.

1. Understanding Requirements & Defining Clear Objectives

Effective software testing begins long before the first test case is executed. Understanding the requirements and defining clear objectives are critical steps in setting the foundation for a successful testing process.

Below is a short table to help you set testing requirements and objectives:

By thoroughly preparing in these areas, you ensure that your testing efforts are aligned with the project’s goals and that you can achieve comprehensive and effective testing outcomes.

2. Plan the Testing and QA Processes

Planning is another important part, as it provides a structured roadmap that keeps all testing activities efficient and effective. A well-crafted test plan serves as a formal guide for all stakeholders, facilitating clear communication and setting precise expectations.

Here’s a detailed approach to planning:

1. Write Up a Formal Test Plan: this document should include all critical aspects such as the testing strategy, required tools, roles and responsibilities, and timelines;

2. Set SMART Goals and Objectives: the goals and objectives of the test plan should be SMART – Specific, Measurable, Achievable, Relevant, and Time-bound;

3. Identify and Document Testing Resources: determine the resources required for the testing process, including tools, environments, and personnel. Document the availability and allocation of these resources to avoid any potential bottlenecks or delays;

4. Define Testing Scope and Strategy: outline the scope of the testing activities, including the features to be tested, the types of testing to be performed (e.g., functional, performance, security), and the testing environments. Develop a strategy that includes the approach for test case design, execution, and defect management;

5. Discuss and Review the Plan: share the test plan with all relevant stakeholders, including the development team, project managers, and business analysts;

6. Get Ready for Execution: once the plan is finalized, prepare for the execution phase by setting up the necessary testing environments, configuring tools, and training the testing team as required.

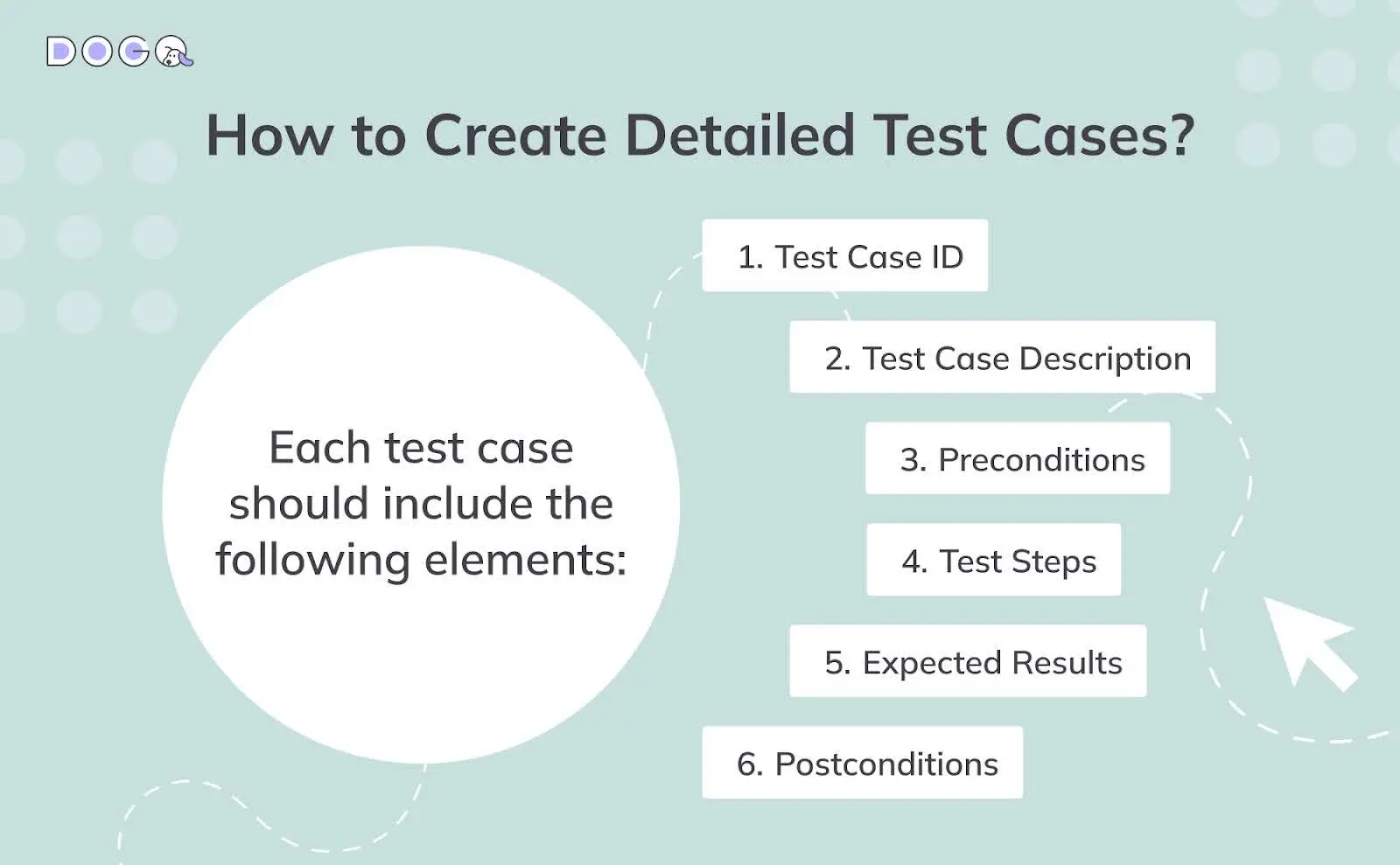

3. Design Test Cases

Detailed test cases help to systematically evaluate how the software performs under various conditions, from simple interactions to complex scenarios.

Approach:

1. Define Objectives: determine what functionality or aspect of the software the test case is intended to validate;

2. Write Up Detailed Test Cases: each test case should include the following elements:

- ID: Assign a unique identifier for easy tracking and reference;

- Description: Provide a concise description of the functionality being tested;

- Preconditions: Specify any setup or conditions that must be met before the test case can be executed;

- Test Steps: Outline the exact steps to be followed during the test execution. Ensure these steps are clear and easy to understand to avoid ambiguity;

- Expected Results: Define the expected outcome for each step and the overall test case. This serves as the benchmark against which the actual results will be compared;

- Postconditions: Document the state of the system after test execution, including any changes or updates made during the testing process.

3. Include a Variety of Scenarios: design test cases that cover both positive and negative scenarios. Positive scenarios should validate that the software behaves as expected under normal conditions, while negative scenarios should test how the system handles invalid inputs or error conditions;

4. Ensure Reusability and Maintainability: create modular test cases that can be reused in different test scenarios or test cycles, and regularly review and update them;

5. Check and Validate Test Cases: collaborate with developers and business analysts to review and validate test cases. Ensure that they cover all relevant functionality and accurately reflect user requirements.
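The test case elements listed above map naturally onto a structured record. That makes it easier to keep cases consistent and to spot incomplete ones during review. The Python sketch below assumes Python 3.9+. The `CaseSpec` class, its field names, and its `validate` rules are hypothetical illustrations, not the schema of any particular test management tool.

```python
from dataclasses import dataclass, field


@dataclass
class CaseSpec:
    """A documented test case with the fields described above (hypothetical schema)."""
    case_id: str                  # unique identifier for tracking and reference
    description: str              # functionality being validated
    preconditions: list[str]      # setup that must hold before execution
    steps: list[str]              # exact, unambiguous steps
    expected_results: list[str]   # benchmark for comparing actual results
    postconditions: list[str] = field(default_factory=list)  # system state afterwards
    scenario: str = "positive"    # "positive" (valid input) or "negative" (invalid input)

    def validate(self) -> list[str]:
        """Return the problems found; an empty list means the case is well-formed."""
        problems = []
        if not self.case_id.strip():
            problems.append("missing ID")
        if not self.steps:
            problems.append("no test steps")
        if not self.expected_results:
            problems.append("no expected results")
        if self.scenario not in ("positive", "negative"):
            problems.append(f"unknown scenario type: {self.scenario!r}")
        return problems


# One positive and one negative scenario for the same feature
login_ok = CaseSpec(
    case_id="TC-001",
    description="Login succeeds with valid credentials",
    preconditions=["User 'demo' exists"],
    steps=["Open the login page", "Enter a valid username and password", "Click 'Sign in'"],
    expected_results=["The dashboard is displayed"],
    postconditions=["An active session exists for 'demo'"],
)
login_bad_password = CaseSpec(
    case_id="TC-002",
    description="Login is rejected with a wrong password",
    preconditions=["User 'demo' exists"],
    steps=["Open the login page", "Enter a valid username and a wrong password", "Click 'Sign in'"],
    expected_results=["An error message is shown", "No session is created"],
    scenario="negative",
)
```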

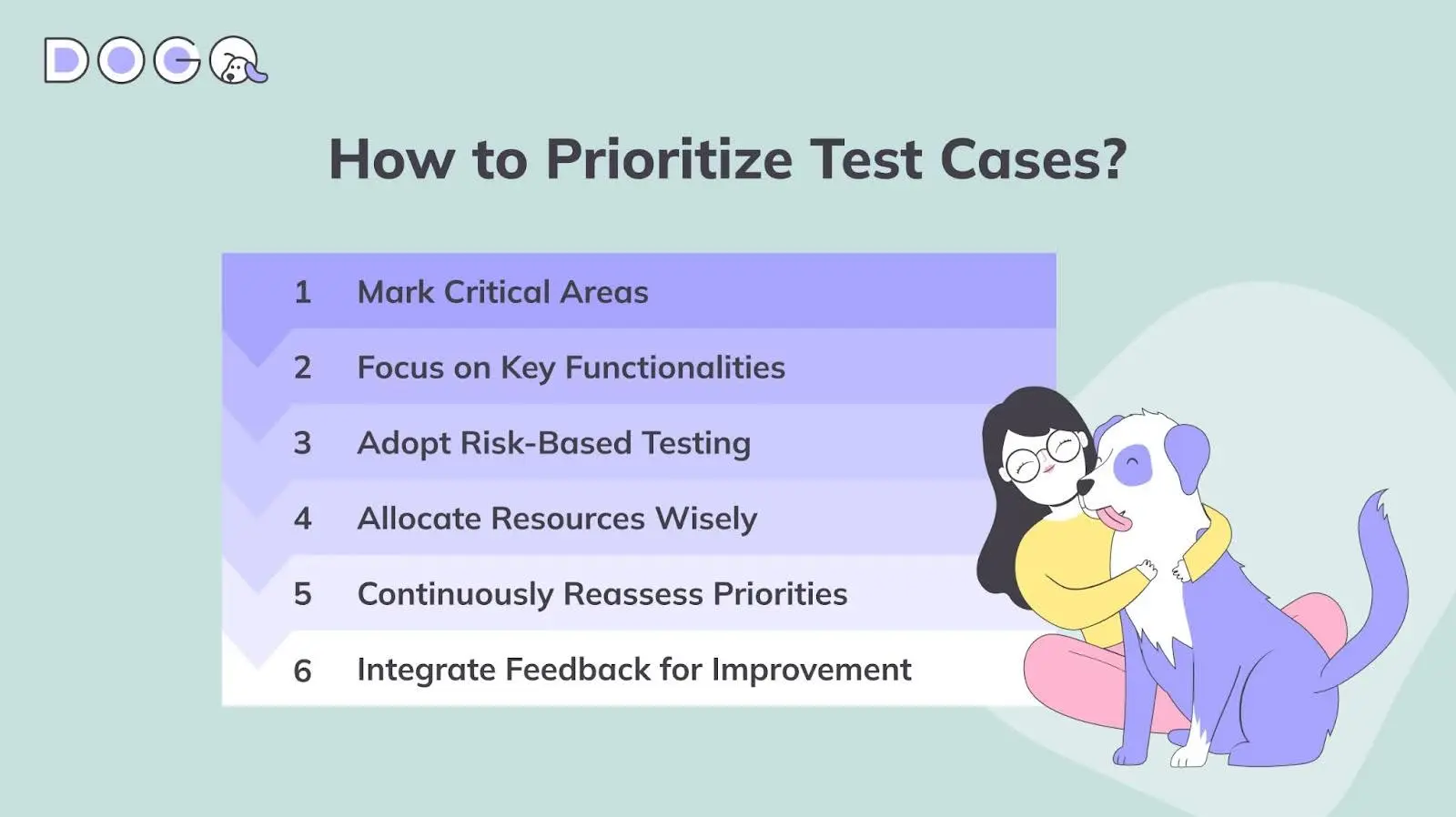

4. Prioritize Tests

Prioritizing testing is a strategic approach that focuses on addressing the most critical and high-risk areas of the software first. This method ensures that the most crucial components are rigorously tested, which is essential for maintaining product quality and reliability.

1. Mark Critical Areas: start by identifying the software’s most critical areas. These are typically components that are fundamental to the application’s core functionality (features that handle sensitive data, perform essential operations, or are frequently used by end-users should be prioritized);

2. Focus on Key Functionalities: Ensure that key functionalities and user workflows are thoroughly tested, especially scenarios that are critical to achieving the software’s primary goals and those that could significantly affect user satisfaction;

3. Adopt Risk-Based Testing: Use a risk-based testing approach where test cases are prioritized based on their risk level and potential impact on the software;

4. Allocate Resources Wisely: Allocate more time and effort to testing high-risk components while ensuring that lower-risk areas still receive adequate attention;

5. Continuously Reassess Priorities and Integrate Feedback for Improvement: Changes in requirements, emerging issues, or software updates may shift testing priorities. Use feedback from previous testing phases to refine those priorities and focus areas.
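Teams often put risk-based prioritization into practice with a simple score. The sketch below assumes a 1 to 5 scale for both failure likelihood and impact, and it uses risk = likelihood × impact, which is a common heuristic rather than a standard. The feature names, the scores, and the proportional `allocate_hours` rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    likelihood: int  # chance of failure, 1 (rare) to 5 (very likely); assumed scale
    impact: int      # damage if it fails, 1 (minor) to 5 (critical); assumed scale

    @property
    def risk_score(self) -> int:
        # Simple, widely used heuristic: risk = likelihood x impact
        return self.likelihood * self.impact


def prioritize(features: list[Feature]) -> list[Feature]:
    """Highest-risk features first; sorted() is stable, so ties keep their input order."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


def allocate_hours(features: list[Feature], total_hours: float) -> dict[str, float]:
    """Split the testing budget in proportion to risk (hypothetical allocation rule)."""
    total_risk = sum(f.risk_score for f in features)
    return {f.name: round(total_hours * f.risk_score / total_risk, 1) for f in features}


features = [
    Feature("Search", likelihood=3, impact=2),                 # risk 6
    Feature("Checkout payment", likelihood=4, impact=5),       # risk 20
    Feature("Profile avatar upload", likelihood=2, impact=1),  # risk 2
    Feature("Login", likelihood=2, impact=5),                  # risk 10
]
# Prints ['Checkout payment', 'Login', 'Search', 'Profile avatar upload']
print([f.name for f in prioritize(features)])
print(allocate_hours(features, total_hours=76))
```

Every score is at least 1, so low-risk features still receive some testing time. This matches the advice to give lower-risk areas adequate attention.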

5. Use Test-Oriented Software Development Practices

Test-oriented development practices are also an essential part of good software testing practices, as they foster a mindset that prioritizes quality and reduces the number of bugs discovered during later testing stages.

1. Pair Programming: in pair programming, two developers collaborate at one workstation. One programmer writes the code while the other observes, reviews, and provides suggestions. The constant feedback loop enhances code quality by catching mistakes and bugs early in the development process, ultimately leading to more robust and reliable software.

2. Test-Driven Development (TDD): this is a methodology where tests are written before the actual code. The process begins by creating a test that initially fails, as the required functionality is not yet implemented. Developers then write the minimal code necessary to pass the test and subsequently refactor the code to improve its quality. This ensures that the code meets the defined requirements and that the functionality is thoroughly tested, reducing the likelihood of defects and improving overall code quality.

3. Behavior-Driven Development (BDD): it extends TDD by focusing on the behavior of the application from the user’s perspective. It involves writing tests in a natural language format that describes the behavior of the system in specific scenarios. BDD encourages collaboration between developers, testers, and business stakeholders, ensuring that the software meets user expectations and behaves as intended.

4. Continuous Integration (CI): it involves regularly merging code changes into a shared repository and running automated tests to detect integration issues early. By integrating code frequently and validating it through automated testing, CI practices help identify conflicts and defects promptly.

5. Automated Testing: it involves using scripts and tools to execute test cases automatically, reducing the need for manual testing. Automated tests can cover a wide range of scenarios, including regression, performance, and load testing.
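The sketch below makes the TDD cycle described above concrete using Python's built-in `unittest` module. The `apply_discount` function and its rules are hypothetical. In a real TDD session, you would write and run the test class first and watch it fail before the function exists.

```python
import unittest


# Step 1 (red): write the tests first. Before apply_discount existed, these failed.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_percent_above_100(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)


# Step 2 (green): the minimal implementation that makes the tests pass.
# Step 3 (refactor): tidy the code while keeping every test green.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def run_tests() -> unittest.TestResult:
    """Run the suite programmatically, e.g. from a script or a CI step."""
    suite = unittest.TestLoader().loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```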

6. Use a Shift-Left Approach to Start Testing Early and Often

The shift-left approach to testing emphasizes integrating testing activities throughout the entire software development lifecycle, rather than concentrating them in a single phase at the end. One of the core software testing best practices, this strategy involves performing tests early and continuously so that the software’s quality is verified from the outset of development.

To effectively implement the shift-left approach, follow a structured plan-execute-organize flow:

- Plan: Develop a comprehensive testing strategy that outlines the scope, objectives, and methodologies for early testing. Incorporate testing activities into the overall development plan and establish clear milestones for continuous verification.

- Execute: Perform testing continuously as development progresses. Use automated tests, unit tests, and integration tests to validate code changes and ensure that defects are identified and addressed promptly.

- Organize: Maintain an organized approach to testing by tracking test results, managing test cases, and documenting defects. Utilize test management tools to streamline the process and facilitate effective communication among team members.

Adopting a shift-left approach ensures that testing is an integral part of the development process, leading to higher quality software, reduced time and costs, and improved collaboration. By starting testing early and often, you can achieve a smoother development workflow and deliver a product that meets user expectations and quality standards.

7. Automate Testing Where Possible

Automation plays a crucial role in modern software development by accelerating the testing process and enhancing overall efficiency. Leveraging automated testing tools allows you to execute a large volume of tests rapidly, which is especially beneficial for complex and extensive projects. This speed results in shorter development cycles and faster time-to-market for software products.

Key Benefits of Automation:

1. Speed and Efficiency: Automated tests can run numerous test cases in a fraction of the time it would take to execute them manually. This speed is invaluable for large-scale projects where quick feedback and iteration are essential;

2. Consistency and Accuracy: Automation ensures that tests are executed precisely the same way each time they are run. This consistency eliminates the variability associated with manual testing and provides reliable, repeatable results;

3. Continuous Testing Integration: Incorporating automation into the development cycle facilitates continuous testing, where tests are performed automatically as code changes are made. This integration helps in detecting issues early, improving code quality, and enabling faster resolutions;

4. Efficiency in Repetitive Tasks: Automated testing excels at handling repetitive tasks, such as regression tests, which need to be executed frequently throughout the development process. Automating these tasks frees up valuable resources and allows manual testers to focus on more complex, exploratory testing scenarios;

5. Better Coverage: Automation enables extensive test coverage by executing a wide range of test cases and scenarios that might be impractical to perform manually. This comprehensive testing approach helps identify potential issues that could otherwise go unnoticed.
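The sketch below illustrates how a repetitive regression check can be automated. It runs a table of known inputs through the same assertion on every run. The `normalize_email` function and the cases are hypothetical. `subTest` is part of Python's standard `unittest` module, and it reports each failing case separately.

```python
import unittest


def normalize_email(raw: str) -> str:
    """Hypothetical function under test: trims whitespace and lower-cases an email."""
    return raw.strip().lower()


# Each tuple is (input, expected output). Adding a regression case is a one-line change,
# and every run executes all cases in exactly the same way.
REGRESSION_CASES = [
    ("User@Example.com", "user@example.com"),
    ("  admin@example.org ", "admin@example.org"),
    ("QA@EXAMPLE.NET", "qa@example.net"),
]


class NormalizeEmailRegressionTest(unittest.TestCase):
    def test_known_inputs(self):
        for raw, expected in REGRESSION_CASES:
            # subTest reports every failing case instead of stopping at the first one
            with self.subTest(raw=raw):
                self.assertEqual(normalize_email(raw), expected)


def run_suite() -> unittest.TestResult:
    suite = unittest.TestLoader().loadTestsFromTestCase(NormalizeEmailRegressionTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```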

That said, manual testing remains particularly valuable for nuanced, exploratory testing and for scenarios that require human judgment and intuition. A balanced approach, combining automated and manual testing, ensures thorough and effective quality assurance.

8. Leverage AI/ML and Low-Code/No-Code Testing Platforms

To stay ahead in today’s fast-evolving software landscape, it’s essential to embrace the latest innovations in testing technology. Two of the most impactful trends are artificial intelligence/machine learning (AI/ML) and low-code/no-code testing platforms.

Artificial Intelligence and Machine Learning in Testing

AI and ML are transforming software testing by automating complex tasks and making test processes smarter:

- Smart Test Case Generation: AI analyzes user behavior, logs, and requirements to automatically generate comprehensive test scenarios, including edge cases that might be overlooked manually.

- Self-Healing Test Automation: AI-powered scripts can adapt to changes in the user interface (such as new element IDs), reducing maintenance time and effort.

- Defect Prediction and Root Cause Analysis: ML algorithms use historical data to predict high-risk code areas, enabling targeted testing and faster bug resolution.

- Visual Validation Testing: Tools like Applitools and Percy use computer vision to detect UI inconsistencies across devices and browsers at pixel-level accuracy.
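Commercial tools implement self-healing with their own element-matching logic, which is often ML-based. The sketch below shows only the underlying idea, using a simple rule-based fallback. If the primary selector no longer matches, it tries alternative attribute sets and logs the substitution for review. The `HealingLocator` class and the dictionary-based page model are hypothetical stand-ins for a real browser DOM.

```python
from dataclasses import dataclass, field


@dataclass
class HealingLocator:
    """Hypothetical locator: tries the primary selector, then fallbacks in order."""
    primary: dict               # e.g. {"id": "submit-btn"}
    fallbacks: list[dict]       # alternative attribute sets, most specific first
    healed_log: list[str] = field(default_factory=list)

    def find(self, page: list[dict]) -> dict:
        for candidate in [self.primary, *self.fallbacks]:
            matches = [el for el in page if all(el.get(k) == v for k, v in candidate.items())]
            if len(matches) == 1:  # accept only an unambiguous match
                if candidate is not self.primary:
                    # Record the healing so a human can review it and update the test
                    self.healed_log.append(f"{self.primary} -> {candidate}")
                    self.primary = candidate
                return matches[0]
        raise LookupError(f"No unique element matches {self.primary} or its fallbacks")


# After a UI change, the button's id is no longer "submit-btn"
page = [
    {"tag": "input", "id": "email", "name": "email"},
    {"tag": "button", "id": "btn-submit-v2", "text": "Sign in", "type": "submit"},
]
locator = HealingLocator(
    primary={"id": "submit-btn"},
    fallbacks=[{"tag": "button", "text": "Sign in"}, {"type": "submit"}],
)
button = locator.find(page)  # found via the first fallback; the healing is logged
```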

Low-Code and No-Code Testing Platforms

These platforms democratize test automation, making it accessible to non-technical team members and accelerating release cycles:

- Drag-and-Drop Interfaces: Platforms such as Katalon Studio and BugBug allow users to build tests quickly using pre-built components.

- Cross-Platform Support: Run tests on thousands of device-browser-OS combinations via cloud-based platforms.

- CI/CD Integration: Low-code/no-code tools integrate seamlessly with continuous integration and delivery pipelines, streamlining the testing process.

Implementation Tips:

- Combine AI-powered tools for intelligent analysis with low-code platforms for rapid test creation.

- Train business analysts and other non-developers to create and maintain tests, freeing up developers for more complex tasks.

- Use anomaly detection systems to monitor performance metrics in real time.
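The anomaly detection tip above can start as simply as a rolling z-score check on response times. This minimal sketch assumes a 20-sample window, a 3-standard-deviation threshold, and measurements in milliseconds. The `ResponseTimeMonitor` class is hypothetical. Production monitoring would typically rely on dedicated observability tooling.

```python
from collections import deque
from statistics import mean, stdev


class ResponseTimeMonitor:
    """Flags measurements that deviate strongly from the recent baseline (z-score test)."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.samples = deque(maxlen=window)  # sliding window of recent measurements (ms)
        self.threshold = threshold           # standard deviations that count as anomalous

    def observe(self, value_ms: float) -> bool:
        """Record a measurement and return True if it is anomalous."""
        is_anomaly = False
        if len(self.samples) >= 5:  # require a minimal baseline before judging
            mu, sigma = mean(self.samples), stdev(self.samples)
            # A perfectly flat baseline (sigma == 0) is not judged, to avoid dividing by zero
            if sigma > 0 and abs(value_ms - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.samples.append(value_ms)  # keep outliers out of the baseline
        return is_anomaly


monitor = ResponseTimeMonitor()
for ms in [120, 118, 125, 122, 119, 121, 123]:
    monitor.observe(ms)        # normal traffic builds the baseline
print(monitor.observe(480))    # True: far outside the recent range
```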

By adopting these modern approaches, teams can achieve faster releases, higher test coverage, and more reliable software quality.

9. Keep Tests Independent and Repeatable

Ensuring that your tests are independent and repeatable is a crucial part of software testing best practices. This practice enhances the overall quality and trustworthiness of the testing process, enabling more informed decisions about the software’s readiness for production:

1. Higher Reliability: Independent tests are designed to operate in isolation from each other, ensuring that the outcome of one test does not impact another;

2. Simplified Debugging: When a test fails, having independent tests makes it easier to pinpoint the exact area of failure. Since each test focuses on a specific functionality or component, any issues detected are directly associated with that particular area;

3. Better Test Maintenance: Tests that are independent and repeatable are more straightforward to maintain and update. Changes in one part of the system or application are less likely to require extensive modifications to the entire test suite;

4. Consistent Results: Repeatable tests ensure that the same test can be executed multiple times with consistent results. This consistency is crucial for tracking changes over time and validating that fixes or updates have not introduced new issues;

5. Efficient Resource Utilization: Independent and repeatable tests enable more efficient use of testing resources. With well-defined, isolated tests, you can allocate testing resources more effectively and avoid redundant testing efforts.
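The sketch below shows two habits that support independence and repeatability, using Python's `unittest`. First, `setUp` gives each test a fresh fixture. Second, the suite can run in a shuffled order to expose hidden dependencies between tests. The `ShoppingCart` class is a hypothetical system under test. Shuffling with a fixed seed is one possible technique, not a built-in `unittest` feature.

```python
import random
import unittest


class ShoppingCart:
    """Hypothetical system under test."""

    def __init__(self):
        self.items: dict[str, int] = {}

    def add(self, sku: str, qty: int = 1) -> None:
        if qty <= 0:
            raise ValueError("qty must be positive")
        self.items[sku] = self.items.get(sku, 0) + qty

    def total_quantity(self) -> int:
        return sum(self.items.values())


class ShoppingCartTest(unittest.TestCase):
    def setUp(self):
        # A fresh cart for every test, so no test depends on state left by another
        self.cart = ShoppingCart()

    def test_add_single_item(self):
        self.cart.add("SKU-1")
        self.assertEqual(self.cart.total_quantity(), 1)

    def test_add_same_item_twice_accumulates(self):
        self.cart.add("SKU-1", 2)
        self.cart.add("SKU-1", 3)
        self.assertEqual(self.cart.items, {"SKU-1": 5})

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            self.cart.add("SKU-1", 0)


def run_in_random_order(seed: int) -> unittest.TestResult:
    """Shuffle the test order; independent tests pass no matter which order they run in."""
    tests = list(unittest.TestLoader().loadTestsFromTestCase(ShoppingCartTest))
    random.Random(seed).shuffle(tests)
    return unittest.TextTestRunner(verbosity=0).run(unittest.TestSuite(tests))
```

The shuffle uses a seeded `random.Random`, so you can replay any failing order exactly by rerunning with the same seed.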

10. Test on Real Devices

While simulators and emulators offer a convenient way to conduct testing, they cannot fully replicate the nuances of real-world conditions that end-users will experience. Testing on real devices provides a more accurate reflection of how the system, application, or bot will perform in diverse, real-life scenarios.

Key Advantages of Testing on Real Devices:

1. Authentic User Experience: Simulators and emulators often fail to mimic the exact user experience, including device-specific quirks and environmental factors. Testing on actual devices allows developers to observe how the software behaves in real-world conditions, such as varying battery levels, network speeds, and hardware variations;

2. Identification of Device-Specific Issues: Real devices expose issues that may not be apparent in a simulated environment. For example, certain bugs or performance issues might only occur due to specific device configurations or hardware limitations. Testing on real devices helps identify and address these device-specific problems before the software is released;

3. Accurate Performance Metrics: Performance can vary significantly between real devices and simulated environments. Testing on actual devices provides precise metrics on application speed, responsiveness, and resource utilization, ensuring that performance benchmarks reflect real user experiences;

4. Dealing with Real-World Scenarios: Real devices encounter various scenarios that are difficult to replicate in a simulation, such as pop-ups, background processes, and network fluctuations. Testing on physical devices helps developers understand how the application handles these real-world conditions, leading to more robust and reliable software.

11. Testing Metrics Practice

Effective use of measurement metrics is crucial for assessing the results and impact of testing efforts. To leverage testing metrics effectively, follow these practices:

1. Choose the Right Metrics: Select metrics that align closely with your business goals and objectives. Ensure that the metrics you choose are relevant to your specific testing needs and can be accurately tracked using your current infrastructure.

Key metrics might include:

- Defect density;

- Test coverage;

- Test pass rate;

- Defect resolution time.

2. Set Realistic Targets: Establish achievable targets for each metric, recognizing that aiming for 100% is impractical for many metrics. Realistic targets keep your goals challenging yet attainable and provide a clear benchmark for success;

3. Use Metrics in Tandem: Rely on a combination of metrics rather than a single metric to gain a comprehensive understanding of your testing performance. No single metric can provide a complete picture: using multiple metrics together will offer a more nuanced view of your testing effectiveness and quality;

4. Collect Feedback and Improve KPIs: Gather feedback from team members who interact with the metrics, as they may identify shortcomings or suggest improvements. Regularly review and refine your key performance indicators (KPIs) based on this feedback to ensure they remain relevant and effective;

5. Ensure Understanding and Compliance: Make sure that all stakeholders involved in the testing process understand the chosen metrics and the targets set. Ensure that everyone follows the established metrics cycle and integrates them into their testing practices to maintain consistency and alignment with business objectives.
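Once the raw counts are available, the metrics listed above are straightforward to compute. This sketch uses common definitions:

- defect density as defects per thousand lines of code;
- pass rate as passed tests over executed tests;
- requirements covered over total requirements, as one form of test coverage.

The function names, sample numbers, and target values are hypothetical, and your team's definitions may differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RunSummary:
    passed: int
    failed: int
    skipped: int = 0


def pass_rate(run: RunSummary) -> float:
    executed = run.passed + run.failed  # skipped tests are left out of the denominator
    return run.passed / executed if executed else 0.0


def defect_density(defects: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (KLOC), one common size measure."""
    return defects / size_kloc


def requirement_coverage(covered: int, total: int) -> float:
    """Share of requirements exercised by at least one test."""
    return covered / total if total else 0.0


def mean_resolution_time(defects: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from a defect being opened (first item) to being closed (second)."""
    durations = [closed - opened for opened, closed in defects]
    return sum(durations, timedelta()) / len(durations)


def check_targets(values: dict[str, float], targets: dict[str, float]) -> dict[str, bool]:
    """Evaluate several metrics against their targets together, not one in isolation."""
    return {name: values[name] >= target for name, target in targets.items()}


# Hypothetical sample data and targets (deliberately below 100%)
run = RunSummary(passed=188, failed=12, skipped=5)
metrics = {
    "pass_rate": pass_rate(run),                           # 188 / 200 = 0.94
    "requirement_coverage": requirement_coverage(45, 50),  # 0.9
}
targets = {"pass_rate": 0.95, "requirement_coverage": 0.90}
print(check_targets(metrics, targets))  # {'pass_rate': False, 'requirement_coverage': True}
print(defect_density(18, 12.0))         # 1.5 defects per KLOC
print(mean_resolution_time([
    (datetime(2025, 1, 1), datetime(2025, 1, 3)),  # 2 days
    (datetime(2025, 1, 2), datetime(2025, 1, 6)),  # 4 days
]))                                      # 3 days, 0:00:00
```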

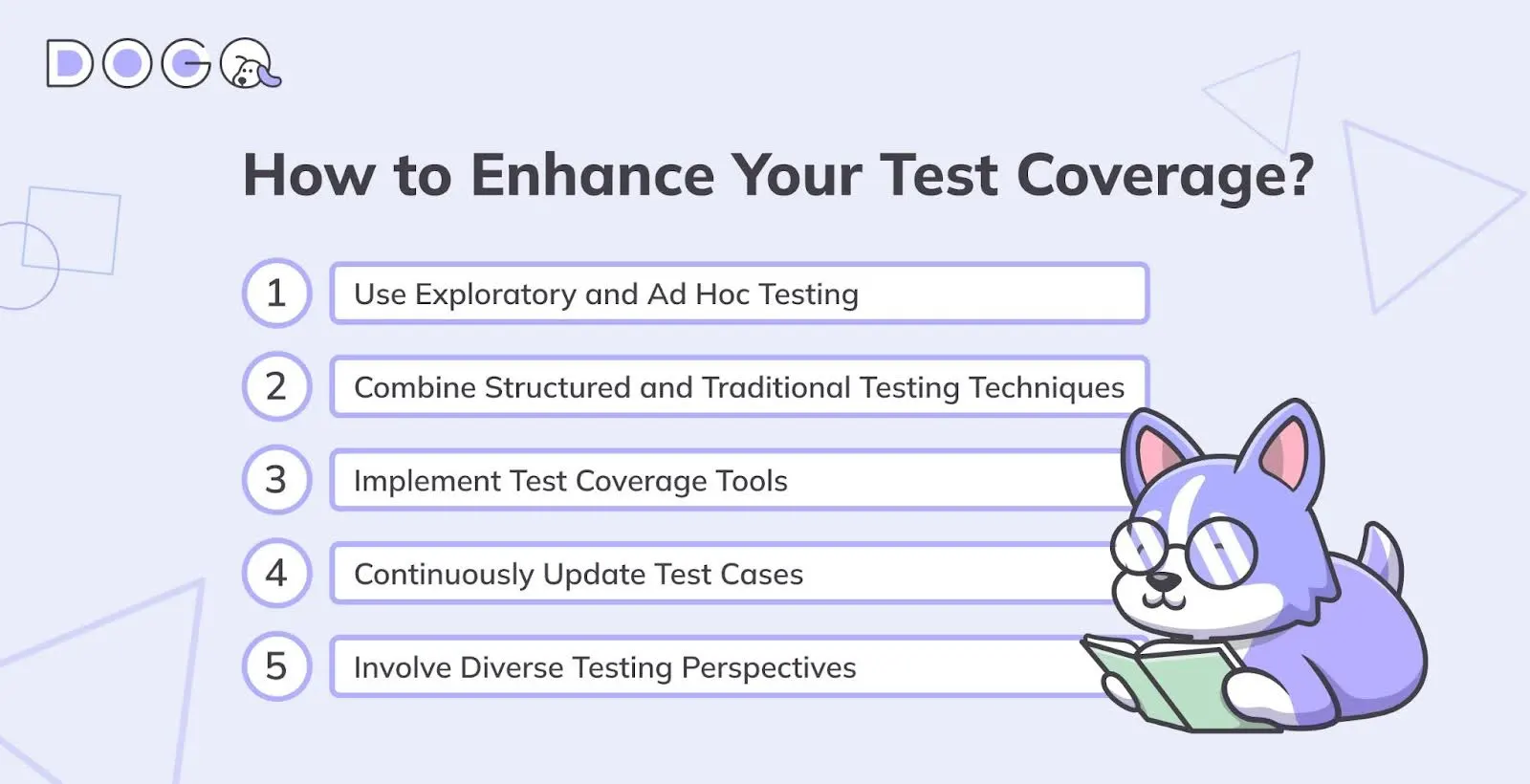

12. Enhancing Test Coverage

Expanding test coverage ensures that a broader range of scenarios and potential issues are addressed, leading to a more reliable and robust software product.

Here’s how to enhance test coverage effectively:

1. Use Exploratory and Ad Hoc Testing: Utilize exploratory and ad hoc testing to uncover edge cases and unexpected behaviors that may not be covered by standard test scenarios;

2. Combine Scripted and Unscripted Testing Techniques: While structured, scripted testing ensures that predefined scenarios and requirements are systematically evaluated, unscripted techniques such as exploratory testing provide additional coverage by letting testers investigate beyond the planned test cases;

3. Implement Test Coverage Tools: These tools can help identify gaps in your test cases, ensuring that all critical areas of the software are thoroughly tested. Coverage metrics, such as code coverage, branch coverage, and path coverage, provide valuable insights into the extent of your testing efforts;

4. Continuously Update Test Cases: Regularly review and update your test cases to reflect changes in the software and its requirements. As new features are added and existing features are modified, ensure that your test cases are adjusted accordingly to maintain comprehensive coverage;

5. Involve Diverse Testing Perspectives: Encourage input from various team members, including developers, testers, and end-users, to gain different perspectives on potential areas of concern. This collaborative approach helps identify diverse scenarios and enhance the overall test coverage.
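Coverage tools such as coverage.py, run with `--branch`, instrument code automatically. To show what branch coverage actually counts, the sketch below instruments a hypothetical `classify_age` function by hand. Each branch outcome records itself when it runs, and coverage is the share of outcomes that the tests exercised.

```python
# Every branch outcome in the function under test (hand-maintained for this sketch)
BRANCHES = {"age<0:true", "age<0:false", "age>=18:true", "age>=18:false"}
hits: set[str] = set()


def classify_age(age: int) -> str:
    """Hypothetical function under test, instrumented to record each branch outcome."""
    if age < 0:
        hits.add("age<0:true")
        raise ValueError("age cannot be negative")
    hits.add("age<0:false")
    if age >= 18:
        hits.add("age>=18:true")
        return "adult"
    hits.add("age>=18:false")
    return "minor"


def branch_coverage() -> float:
    """Fraction of branch outcomes exercised so far."""
    return len(hits & BRANCHES) / len(BRANCHES)


hits.clear()
classify_age(30)  # exercises age<0:false and age>=18:true
print(f"{branch_coverage():.0%}")  # 50%: the "minor" and negative-age branches never ran
```

Even with 100% line coverage on a function, missing outcomes such as the negative-age branch show up clearly in a branch view.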

13. Report Bugs Effectively

An effective bug report is crucial for efficient software testing, providing clear communication between QA specialists and developers. Here’s how to craft an effective bug report:

1. Offer Solutions When Possible: Include not just the details of the bug but also potential solutions or suggestions for fixing it. Describe the expected behavior of the feature to guide developers towards the desired outcome;

2. Reproduce the Bug Before Reporting: Ensure the bug is reproducible before reporting it. Provide a detailed, step-by-step guide on how to reproduce the issue, specifying the context and avoiding ambiguous information. Even if the bug appears intermittently, document it thoroughly;

3. Ensure Clarity: A bug report should be clear and concise, helping developers understand the issue without confusion. Include details on what was observed versus what was expected. Address only one issue per report to avoid conflating multiple problems;

4. Include Screenshots: Attach screenshots that highlight the defect. Visual evidence can significantly aid developers in understanding the issue and speed up the resolution process;

5. Provide a Bug Summary: Write a precise summary of the bug to quickly convey its nature. This helps in fast identification and classification of the issue in bug tracking systems, as bug IDs and descriptions are often difficult to remember.
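One lightweight way to enforce the guidance above is a structured bug report with a few automated checks before submission. The `BugReport` fields, the 80-character summary limit, and the checks are hypothetical. Most bug trackers have their own templates, and no automated check can confirm that a report covers only one issue.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    summary: str                    # short, searchable one-line title
    steps_to_reproduce: list[str]   # exact steps, in order
    expected: str                   # what should have happened
    actual: str                     # what was observed instead
    environment: str = ""           # e.g. browser, OS, build
    screenshots: list[str] = field(default_factory=list)  # file paths or links
    suggested_fix: str = ""         # optional hint for developers

    def problems(self) -> list[str]:
        """Basic pre-submission checks; an empty list means the report looks complete."""
        issues = []
        if not self.summary.strip() or len(self.summary) > 80:  # 80 chars: assumed limit
            issues.append("summary must be non-empty and at most 80 characters")
        if not self.steps_to_reproduce:
            issues.append("add steps to reproduce")
        if self.expected.strip() == self.actual.strip():
            issues.append("expected and actual results must differ")
        return issues

    def to_text(self) -> str:
        steps = "\n".join(f"{i}. {step}" for i, step in enumerate(self.steps_to_reproduce, 1))
        lines = [
            f"Summary: {self.summary}",
            f"Environment: {self.environment or 'n/a'}",
            f"Steps to reproduce:\n{steps}",
            f"Expected: {self.expected}",
            f"Actual: {self.actual}",
        ]
        if self.screenshots:
            lines.append("Screenshots: " + ", ".join(self.screenshots))
        if self.suggested_fix:
            lines.append(f"Suggested fix: {self.suggested_fix}")
        return "\n".join(lines)


report = BugReport(
    summary="Checkout button does nothing after applying a promo code",
    steps_to_reproduce=["Add any item to the cart", "Apply promo code SAVE10", "Click 'Checkout'"],
    expected="The payment page opens",
    actual="Nothing happens and the browser console shows an error",
    environment="Chrome, Windows 11",
    screenshots=["checkout-error.png"],
)
print(report.to_text())
```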

Best Practices for Software Testing: Wrapping Up

Implementing good software testing practices is essential for ensuring high-quality, reliable software. By understanding requirements and defining clear objectives, planning thoroughly, designing comprehensive test cases, and prioritizing critical areas, you set a solid foundation for effective testing. Adopting test-oriented development practices, leveraging a shift-left approach, and automating where possible further enhance your testing efforts.

Maintaining independent and repeatable tests, testing on real devices, and using metrics to guide your processes are crucial for achieving robust and dependable software. Enhancing test coverage through various techniques and reporting bugs effectively ensures that issues are identified and addressed promptly.

Adhering to these best practices not only improves the accuracy and efficiency of your testing processes but also contributes to the overall success and quality of your software projects. By continuously refining your approach and integrating these strategies, you position your team to deliver superior software products that meet and exceed user expectations.
